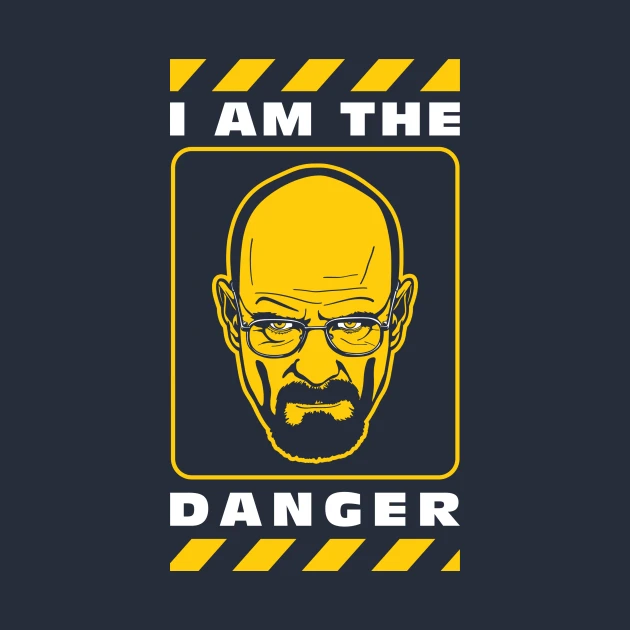

“I am the danger,” said the AI voiceover tool, not Walter White!

I recently received the usual newsletter from GFTB, and it included a link to an article titled “AI Voiceover Explained”. I was curious to check it out, and I found that the article was first published in September 2022. The article is very informative, clearly reflects the writer's extensive experience in the field, is structured in an engaging way, and contains a lot of information I didn't know before. I will share the link at the end of this post for any curious minds out there. But the part I most wanted to share with you today came later in the article, where it lists the different types of AI voiceover jobs on the market along with the pros and cons of each. Even on a quick read like mine, you will notice that almost every type carries the same risk: “The company might sell your voice in the future which you may have no control over.” The only type claimed not to carry this risk is AI model training work.

This is frightening in every possible way! I told myself that I fully understand the importance of contracts in these jobs, but if something went wrong, how would I even know? The article mentions that such breaches are currently very difficult to monitor. The second thing I want to say, without getting into politics, is that I don't believe many of my Arab colleagues could easily take legal action against a company and expect to get anything out of it.

The article goes on to raise even more alarm with the following sentence in the section on prospective AI voice models: “You cannot decide or vote on where this is sold, whether this is to a reputable company, or to be the voice of a porn-site or a sex-doll (I do not jest: both of which we have had reports of happening).”

Can you believe this?!

Coincidentally, I was checking the terms and conditions on the ElevenLabs website today, and I was surprised to find that if you decide to lend them your voice to earn passive income, as they put it, the following clause applies:

“If you enable the ‘Live Moderation’ setting, we will use tools to check whether requests to use your voice model contain text that belongs to a number of prohibited categories (such as hate/threatening content, self-harm, etc.). These tools are intended to stop users from applying your voice model to text belonging to a prohibited category, but they may not catch every instance of prohibited content. Moderation may introduce extra latency for users using the voice model, which could lead to lower usage and lower rewards.”

So they are effectively nudging you to leave moderation off for a better chance of your voice being used, whatever the content. At the same time, they admit that even if you enable moderation, errors can still slip through and they cannot catch every single instance.

Frightening, right?

I know I might be late posting about this, and there may have been a huge buzz that I missed, but this was alarming and made me ask myself: “Why would anyone do this to themselves?”

It is not really a question of AI taking our jobs, but of AI exploiting your voice in every possible way and becoming the danger itself! I felt I needed to share this with all of you to hear your thoughts; maybe I missed something, or maybe there is another explanation that I didn't realize. Here is the link to the article I mentioned above: AI Voice Over – Explained (gravyforthebrain.com)

So, any thoughts, my friends?